Introduction

Children today use digital services from a very young age — games, learning apps, social platforms, and everything in between.

Nearly all of these services collect personal data.

And under the Digital Personal Data Protection Act, 2023 (DPDP Act), a child (anyone under 18) cannot give valid consent for this processing. Their personal data cannot be processed unless an adult (the parent or lawful guardian) steps in to authorise it.

This is where the question becomes critical: how does a platform confirm that the person giving permission is genuinely the child’s parent and an actual adult?

Rule 10 of the DPDP Rules answers this clearly.

Rule 10 explains exactly how Data Fiduciaries must collect verifiable parental consent before processing a child’s personal data.

Let’s break it down in simple, direct terms.

Rule 10: The Operational Blueprint for Child Data Verification

Rule 10 gives the step-by-step process to comply with Section 9(1) of the DPDP Act, which says:

No processing of a child’s personal data is allowed without verifiable consent from the parent or lawful guardian.

In simple terms: no parent, no processing. Think of it as the digital equivalent of “You can’t go on the school trip without a signed permission slip.”

Here is the detailed breakdown.

1. Mandatory Verification Obligation (Rule 10(1)(a))

Before processing a child’s personal data, the Data Fiduciary must verify:

- The person giving consent is an adult (18 or older).

- That adult is the parent or lawful guardian of the child.

This must be confirmed with evidence, not assumptions.

Invalid methods:

- “I am the parent” checkboxes

- OTP-only verification

- Basic email/phone verification

- Self-attestation without identity proof

What is valid:

Verification linked to identity and age information from reliable or government-recognised sources.
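To make the valid/invalid split concrete, here is a minimal Python sketch of how a platform might encode it as an allowlist. The enum names are hypothetical labels chosen for illustration; Rule 10 does not prescribe any data model.

```python
from enum import Enum


class VerificationMethod(Enum):
    """Hypothetical labels for how a platform checked the person giving consent."""
    CHECKBOX = "i_am_the_parent_checkbox"
    OTP_ONLY = "otp_only"
    EMAIL_OR_PHONE = "email_or_phone"
    SELF_ATTESTATION = "self_attestation"
    HELD_KYC_RECORD = "held_kyc_record"                # identity/age the platform already holds
    GOVERNMENT_ID_DOCUMENT = "government_id_document"  # document voluntarily shared by the parent
    VIRTUAL_TOKEN = "virtual_token"                    # token from an authorised source


# Allowlist: only methods tied to identity and age from a reliable source.
ACCEPTABLE_METHODS = frozenset({
    VerificationMethod.HELD_KYC_RECORD,
    VerificationMethod.GOVERNMENT_ID_DOCUMENT,
    VerificationMethod.VIRTUAL_TOKEN,
})


def is_acceptable(method: VerificationMethod) -> bool:
    """Fail closed: anything not explicitly allowlisted is treated as invalid."""
    return method in ACCEPTABLE_METHODS


if __name__ == "__main__":
    for m in VerificationMethod:
        print(f"{m.value}: {'valid' if is_acceptable(m) else 'invalid'}")
```

An allowlist fails closed: if someone later adds a new method label, it is rejected until a person deliberately approves it.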

2. Sources of Identity and Age Verification (Rule 10(1)(b))

Rule 10 allows only three approved verification sources:

A. Information already held by the Data Fiduciary

This works if:

- The parent is already a user

- The platform already holds reliable identity and age information

This requires strong identity records (KYC-level or equivalent).

B. Information voluntarily provided by the parent

This can include:

- Government-issued identity documents

- DigiLocker credentials

- Verified identity tokens

- Any ID-based age validation issued through an authorised process

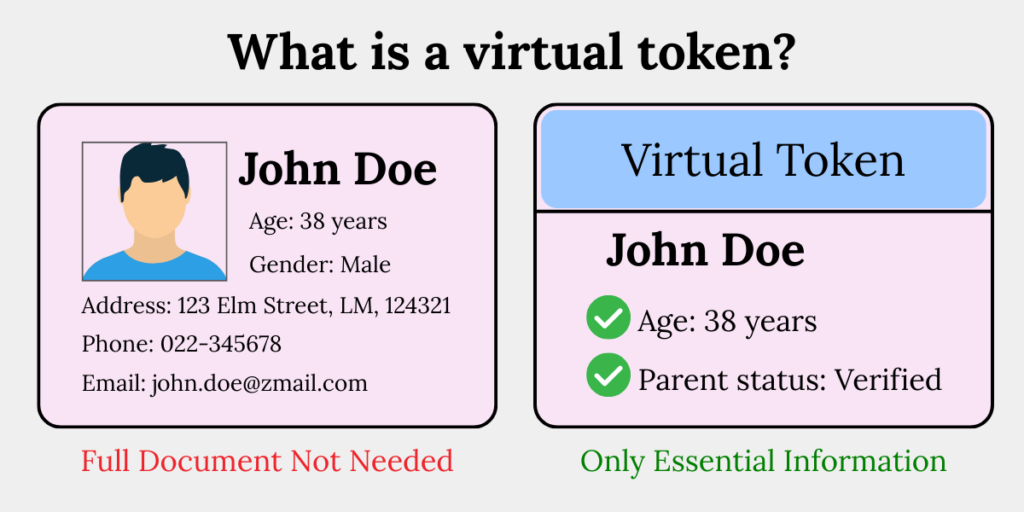

C. A virtual token linked to identity/age information

This is important.

A virtual token is a privacy-preserving way to share identity or age data without sharing full documents.

A virtual token is like showing the ticket stub instead of the whole ID card — it proves what’s needed without revealing everything.

Example: Parent shares a DigiLocker token → Data Fiduciary verifies it → Identity and age confirmed → Consent accepted.
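The verification step in that flow can be sketched in Python. This is a stand-in, not DigiLocker’s actual protocol: it assumes a hypothetical issuer that signs a small set of claims with HMAC-SHA256 using a key shared with the platform. The token format, the claim names (`sub`, `is_adult`, `exp`) and the shared-key setup are all assumptions; real DigiLocker or Authorised Entity integrations use their own documented interfaces.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64e(raw: bytes) -> str:
    # URL-safe base64 without padding.
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


def _b64d(text: str) -> bytes:
    # Restore the padding stripped by _b64e before decoding.
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def issue_token(claims: dict, key: bytes) -> str:
    """Hypothetical issuer side: sign minimal claims (no full ID document)."""
    payload = _b64e(json.dumps(claims, sort_keys=True).encode())
    signature = hmac.new(key, payload.encode(), hashlib.sha256).digest()
    return f"{payload}.{_b64e(signature)}"


def verify_token(token: str, key: bytes, now: Optional[float] = None) -> dict:
    """Platform side: check signature, expiry and the adult flag, then return claims."""
    try:
        payload, sig_text = token.split(".")
    except ValueError:
        raise ValueError("malformed token") from None
    expected = hmac.new(key, payload.encode(), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64d(sig_text)):
        raise ValueError("signature check failed")
    claims = json.loads(_b64d(payload))
    if claims.get("exp", 0) < (time.time() if now is None else now):
        raise ValueError("token expired")
    if claims.get("is_adult") is not True:
        raise ValueError("holder not confirmed as an adult")
    return claims


if __name__ == "__main__":
    key = b"demo-shared-key"  # hypothetical; never hard-code real keys
    token = issue_token({"sub": "ref-7f3a", "is_adult": True, "exp": time.time() + 300}, key)
    print(verify_token(token, key)["sub"])  # ref-7f3a
```

The token carries only a pseudonymous reference and an adult flag, which is the “ticket stub” idea in practice: the platform learns what it needs without ever seeing the underlying document.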

3. Who Can Issue These Tokens? (Rule 10(2))

Rule 10 defines three key terms:

A. “Adult”

Anyone 18 years or older.

The DPDP Act treats individuals of this age or older as capable of understanding their responsibilities and giving legally valid consent on a child’s behalf.
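Because “adult” turns on completed years, a naive year subtraction can misclassify someone a few days before their 18th birthday. A minimal Python sketch, assuming the platform already has a verified date of birth (the function names are illustrative):

```python
from datetime import date
from typing import Optional

ADULT_AGE = 18  # completed years required to count as an adult


def completed_years(dob: date, on: date) -> int:
    """Age in completed years on the date `on`."""
    years = on.year - dob.year
    if (on.month, on.day) < (dob.month, dob.day):
        years -= 1  # this year's birthday has not arrived yet
    return years


def is_adult(dob: date, on: Optional[date] = None) -> bool:
    return completed_years(dob, on or date.today()) >= ADULT_AGE


if __name__ == "__main__":
    dob = date(2007, 6, 15)
    print(is_adult(dob, on=date(2025, 6, 14)))  # False: still 17
    print(is_adult(dob, on=date(2025, 6, 15)))  # True: turns 18 today
```

This sketch treats a 29 February birthday as reached on 1 March in non-leap years; whether that matches the legal position is an assumption worth checking.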

B. “Authorised Entity”

Any entity authorised under law or notified by the government to:

- Maintain identity/age data, or

- Issue virtual tokens based on such data

It also includes any entity appointed by them to issue these tokens.

Examples include:

- Government departments that maintain identity databases (e.g., those issuing birth certificates or age records)

- UIDAI-integrated verification platforms authorised to issue age/identity tokens

- Government-approved digital identity partners that provide verified age credentials

C. “Digital Locker Service Provider”

Recognised under the IT Act as an official provider of secure digital documents and tokens.

This means DigiLocker and similar notified systems can provide verified identity/age proofs.

4. Evidence and Logging Requirements

Rule 10 doesn’t directly spell out logging requirements, but combined with other DPDP obligations, Data Fiduciaries must keep:

- Verification method used

- Source of identity/age info

- Token details

- Date and time of verification

- Linkage between child and parent

- Logs for audit and investigation

This is necessary because the Data Fiduciary carries the burden of proof if the Data Protection Board of India (the Board) asks for evidence.

It’s the compliance version of “pics or it didn’t happen.”
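One way to keep these records audit-ready is an append-only log in which every entry commits to the hash of the one before it, so later edits become detectable. The sketch below is a design assumption, not something Rule 10 mandates; the field names are hypothetical, and it stores a SHA-256 digest of the token rather than the token itself to limit what the log holds.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass(frozen=True)
class VerificationRecord:
    """Hypothetical evidence record; field names are illustrative."""
    child_account_id: str
    parent_account_id: str  # linkage between child and parent
    method: str             # e.g. "virtual_token"
    source: str             # e.g. "digilocker"
    token_digest: str       # SHA-256 of the token, not the token itself
    verified_at: str        # ISO 8601 timestamp in UTC


def make_record(child_id: str, parent_id: str, method: str, source: str,
                token: str) -> VerificationRecord:
    return VerificationRecord(
        child_account_id=child_id,
        parent_account_id=parent_id,
        method=method,
        source=source,
        token_digest=hashlib.sha256(token.encode()).hexdigest(),
        verified_at=datetime.now(timezone.utc).isoformat(),
    )


def _entry_hash(prev: str, record: dict) -> str:
    body = json.dumps({"prev": prev, "record": record}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


class AuditLog:
    """Append-only, hash-chained list of verification records."""

    def __init__(self) -> None:
        self.entries: List[dict] = []

    def append(self, record: VerificationRecord) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        data = asdict(record)
        digest = _entry_hash(prev, data)
        self.entries.append({"prev": prev, "record": data, "hash": digest})
        return digest

    def verify_chain(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or _entry_hash(prev, entry["record"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append(make_record("child-1", "parent-9", "virtual_token", "digilocker", "tok-abc"))
    print(log.verify_chain())  # True
    log.entries[0]["record"]["parent_account_id"] = "parent-2"  # simulated tampering
    print(log.verify_chain())  # False
```

A hash chain alone cannot reveal entries silently dropped from the end, so the latest hash should also be stored somewhere the platform cannot quietly rewrite.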

5. Relationship Validation

Rule 10 requires verifying not just age, but also parent–child relationship.

This means Data Fiduciaries must ensure the person giving consent is:

- The legal parent, or

- The lawful guardian

Acceptable relationship proof can include:

- DigiLocker documents showing dependents

- Government records (where available)

- Verified family details from authorised systems

- Guardianship certificates (for non-parents)

This is a higher standard than most global data protection laws.
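The relationship check can be reduced to a small rule: accept only evidence from a trusted source, and require that it actually names this child. A minimal Python sketch; the source labels and the `RelationshipEvidence` shape are hypothetical, and how each source is fetched and authenticated is out of scope here.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional

# Hypothetical labels for the kinds of proof listed above.
TRUSTED_SOURCES = frozenset({
    "digilocker_document",
    "government_record",
    "authorised_family_record",
    "guardianship_certificate",
})


@dataclass(frozen=True)
class RelationshipEvidence:
    """Summary of relationship proof already retrieved from a verified source."""
    adult_ref: str
    source: str
    dependent_refs: FrozenSet[str] = frozenset()  # children listed as dependents
    guardianship_for: Optional[str] = None        # child named on a guardianship certificate


def relationship_confirmed(evidence: RelationshipEvidence, child_ref: str) -> bool:
    """True only if a trusted source links this adult to this specific child."""
    if evidence.source not in TRUSTED_SOURCES:
        return False  # unknown or self-declared source: fail closed
    if evidence.source == "guardianship_certificate":
        return evidence.guardianship_for == child_ref
    return child_ref in evidence.dependent_refs


if __name__ == "__main__":
    ev = RelationshipEvidence("adult-1", "government_record", frozenset({"child-1"}))
    print(relationship_confirmed(ev, "child-1"))  # True
    print(relationship_confirmed(ev, "child-2"))  # False
```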

6. Non-Negotiable Prohibitions

Even after consent is verified, the following cannot be done:

- Tracking – Following a child’s activity across apps or websites to understand what they do, where they click, or how they behave online is strictly prohibited.

- Behavioural monitoring – Watching how a child uses a platform to predict interests, habits, or patterns is not allowed, even if the goal is product improvement.

- Targeted advertising – Showing ads based on a child’s behaviour, preferences, browsing history, or interaction data is a violation of Section 9(3) of the DPDP Act.

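- Profiling aimed at children – Data Fiduciaries should not build any kind of digital profile or category about a child, such as “likely gamer,” “interested in toys,” or “high-risk user.” Profiles like these are typically built from tracking or behavioural monitoring, which Section 9(3) prohibits.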

These restrictions apply even after verified parental consent; only exemptions that the government notifies under Section 9 can lift them.

Penalty for breaching the additional obligations relating to children under Section 9: up to ₹200 crore.

How Rule 10 Works in Practice

These examples clarify how Rule 10 works in real situations.

| Scenario | What Happens | Verification Needed | Correct Action |
| --- | --- | --- | --- |
| 1. Child signs up, parent already verified | Child declares they are a minor → names parent | Data Fiduciary checks the parent’s stored identity and age details | Confirm adult parent → allow account |
| 2. Child signs up, parent not verified | Child declares they are a minor | Data Fiduciary verifies the parent through a DigiLocker or Authorised Entity token | Validate token → confirm identity → allow processing |
| 3. Existing parent creates child’s account | Parent is already a verified user | Data Fiduciary checks stored identity and age data | Approve child account creation |
| 4. New parent creates child’s account | Parent is new to the platform | Data Fiduciary verifies identity and age using Authorised Entity information or a token | Verify → log → allow onboarding |

The purpose of these examples is simple:

Verification must be reliable, provable, and tied to identity records.
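Read side by side, the four scenarios turn on one question: does the platform already hold reliable identity and age details confirming the parent is an adult? Who starts the signup does not change the check. A minimal Python sketch of that routing, with hypothetical names; the token check itself is assumed to happen elsewhere.

```python
from enum import Enum
from typing import Optional


class Outcome(Enum):
    ALLOW = "allow"                  # verified: proceed, and log the evidence
    REQUEST_TOKEN = "request_token"  # ask the parent for a DigiLocker/Authorised Entity token
    REJECT = "reject"                # do not process the child's data


def route_consent(stored_record_confirms_adult: bool,
                  token_check_passed: Optional[bool] = None) -> Outcome:
    """Route parental consent for any of the four table scenarios."""
    if stored_record_confirms_adult:
        return Outcome.ALLOW          # scenarios 1 and 3: rely on stored identity/age data
    if token_check_passed is None:
        return Outcome.REQUEST_TOKEN  # scenarios 2 and 4: no reliable record yet
    return Outcome.ALLOW if token_check_passed else Outcome.REJECT


if __name__ == "__main__":
    print(route_consent(True).value)          # allow
    print(route_consent(False).value)         # request_token
    print(route_consent(False, False).value)  # reject
```

Every ALLOW outcome should also produce an evidence record, since the table’s “log” step applies whichever path led to approval.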

What This Means for Data Fiduciaries

Processing children’s data now carries higher legal and operational risk.

To stay compliant, platforms must rebuild their child-data journeys around stronger checks and evidence-backed verification.

In practice, they must redesign their systems to:

- Detect child users early

- Trigger mandatory verification flows

- Integrate with Authorised Entities and Digital Locker service providers such as DigiLocker

- Store proof of verification

- Disable all tracking and ad-tech for child accounts

- Flag child-data processing in DPIAs

- Apply stronger security measures

This is not a cosmetic update — it requires structural change.

In other words: get it right the first time or be ready for conversations with the Board you definitely won’t like.

Compliance Roadmap for Rule 10

1. Map where child data is collected

Identify every touchpoint where your platform collects information from children — from signup screens to background analytics — so you know exactly what needs protection.

2. Implement age-gating at all entry points

Add prompts or checks that help your system detect when a user may be a child, so the correct verification flow is triggered immediately.

3. Integrate DigiLocker/token-based verification

Connect your platform with authorised identity services so parents can share verified age and identity information through secure digital tokens.

4. Build parent–child account linking

Create a workflow that connects the child’s account to the verified parent or guardian, ensuring all permissions and actions are tied to the correct adult.

5. Draft simple, child-specific privacy notices

Prepare clear, easy-to-understand notices that explain how your platform handles a child’s data and what the parent is consenting to.

6. Separate child accounts from adult experiences

Design a safer version of your platform for children, removing features that rely on tracking, profiling, or targeted engagement.

7. Turn off tracking and personalised ads

Disable all systems that follow behaviour, analyse patterns, or serve targeted content — these activities are strictly prohibited for children.
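A simple way to enforce this is to make child-account settings non-overridable, so no user request or consent flow can switch tracking back on. A minimal Python sketch; the setting names are hypothetical stand-ins for whatever tracking and ad-tech switches a platform actually has.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExperienceSettings:
    """Hypothetical per-account switches for tracking and ad-tech features."""
    cross_site_tracking: bool = True
    behavioural_analytics: bool = True
    personalised_ads: bool = True
    interest_profiling: bool = True


# Locked profile for child accounts: every tracking/ad-tech switch is off.
CHILD_LOCKED = ExperienceSettings(
    cross_site_tracking=False,
    behavioural_analytics=False,
    personalised_ads=False,
    interest_profiling=False,
)


def effective_settings(is_child: bool, requested: ExperienceSettings) -> ExperienceSettings:
    """Child accounts always get CHILD_LOCKED; `requested` is ignored for them,
    even if a parent has consented to something else."""
    return CHILD_LOCKED if is_child else requested


if __name__ == "__main__":
    wants_ads = ExperienceSettings(personalised_ads=True)
    print(effective_settings(True, wants_ads).personalised_ads)   # False
    print(effective_settings(False, wants_ads).personalised_ads)  # True
```

The dataclass is frozen, so code elsewhere cannot flip a switch on the shared CHILD_LOCKED object at runtime.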


8. Maintain audit-ready verification logs

Store evidence of verification securely, including the method used, identity source, and timestamps, so you can produce proof whenever required.

9. Train teams on Rule 10 processes

Make sure product, engineering, customer support, and compliance teams understand how Rule 10 works and what steps must always be followed.

10. Conduct DPIAs for high-risk use cases

Review any activity involving children’s data through a Data Protection Impact Assessment to identify risks and ensure your safeguards are strong enough.

Think of child data compliance as its own dedicated workflow. Implementing it early is always easier than explaining gaps during an audit.

Conclusion

Rule 10 sets a clear and strict framework for processing children’s personal data.

It demands strong identity checks, reliable verification systems, and complete traceability.

In short, Rule 10 wants every Data Fiduciary to build a digital environment where children are protected by design — and where consent is backed by evidence, not assumptions.

Or think of it this way:

You’re building a safer online space for children — with identity tokens, clean logs, and accountability built in from day one.

Key Takeaways

- Rule 10 requires Data Fiduciaries to collect a child’s data only after verifying that consent comes from a real, identifiable parent or lawful guardian.

- Verification must rely on reliable sources like existing records, parent-provided documents, or virtual tokens issued by authorised entities or made available through DigiLocker.

- Platforms must confirm both the adult’s identity and the parent–child relationship and maintain detailed logs as evidence of compliance.

- The DPDP Act prohibits tracking, behavioural monitoring, and targeted advertising directed at children, even with parental consent; profiling that relies on such data should be treated as off-limits too.

- Compliance with Rule 10 demands system-level changes—age-gating, verification flows, child-specific experiences, audit trails, and DPIAs for high-risk processing.